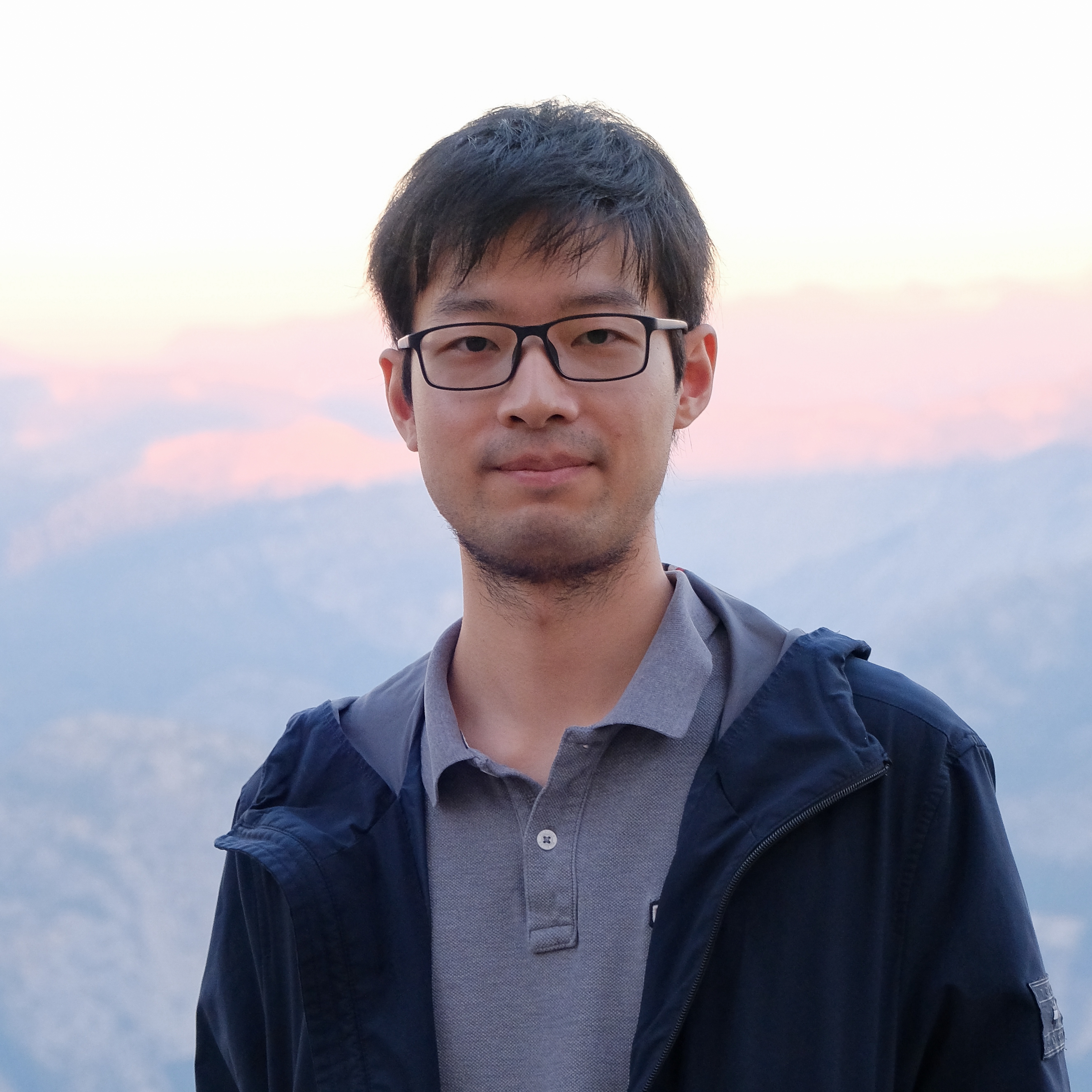

I am an Assistant Professor in the Department of Computer Science and Engineering at the University of California, Riverside. I completed my Ph.D. in the UCLA Computer Science Department in 2025, advised by Prof. Cho-Jui Hsieh. I received my B.Eng. degree from the CST department at Tsinghua University in 2020.

My research focuses on machine learning (ML), especially on developing more trustworthy and reliable AI models. My current interests include, but are not limited to: the interplay between generative AI, verifiers, and formal methods; LLM alignment; formal verification for ML and training verifiable ML models for mission-critical applications; and robustness and safety for ML models.

Group

Ph.D. students

- Shangjian Yin (Fall 2025-)

Prospective Students: I am actively looking for motivated students to join my group, including prospective Ph.D. students (for Fall 2026 admission, please see this page).

Awards

- NVIDIA Academic Grant Program Award, 2025

- UCLA Dissertation Year Award (fellowship), 2024-2025

- Amazon Fellowship (Amazon & UCLA Science Hub fellowship), 2022-2023

- Four-time first-place winner at the International Verification of Neural Networks Competition (VNN-COMP), 2021-2024